I am a Ph.D. student in the Department of Computer Science & Engineering, Lehigh University, where I am advised by Prof. Lichao Sun. I was a research intern at the AI&I Department, Mayo Clinic, where I developed vision-language pre-training and federated learning algorithms for electronic health records (EHR).

My recent research focuses on Multi-modal and Federated Learning for Biomedicine, as well as efficient machine/deep learning system design, with the goal of Accelerating Discovery to Delivery for Clinical Excellence. Ultimately, my career goal is to develop trustworthy and reliable artificial general intelligence (AGI) systems for computational precision medicine and health.

I have published approximately 20 research papers with total

🔥 News

- 2024.10: 🔥🔥TTT-Unet is accepted by AIM-FM Workshop @ NeurIPS’24!

- 2024.10: 🔥🔥Biomedical SAM 2 is accepted by AIM-FM Workshop @ NeurIPS’24!

- 2024.10: 🔥🔥A Comprehensive Survey on Pretrained Foundation Models: A History from BERT to ChatGPT is accepted by International Journal of Machine Learning and Cybernetics!

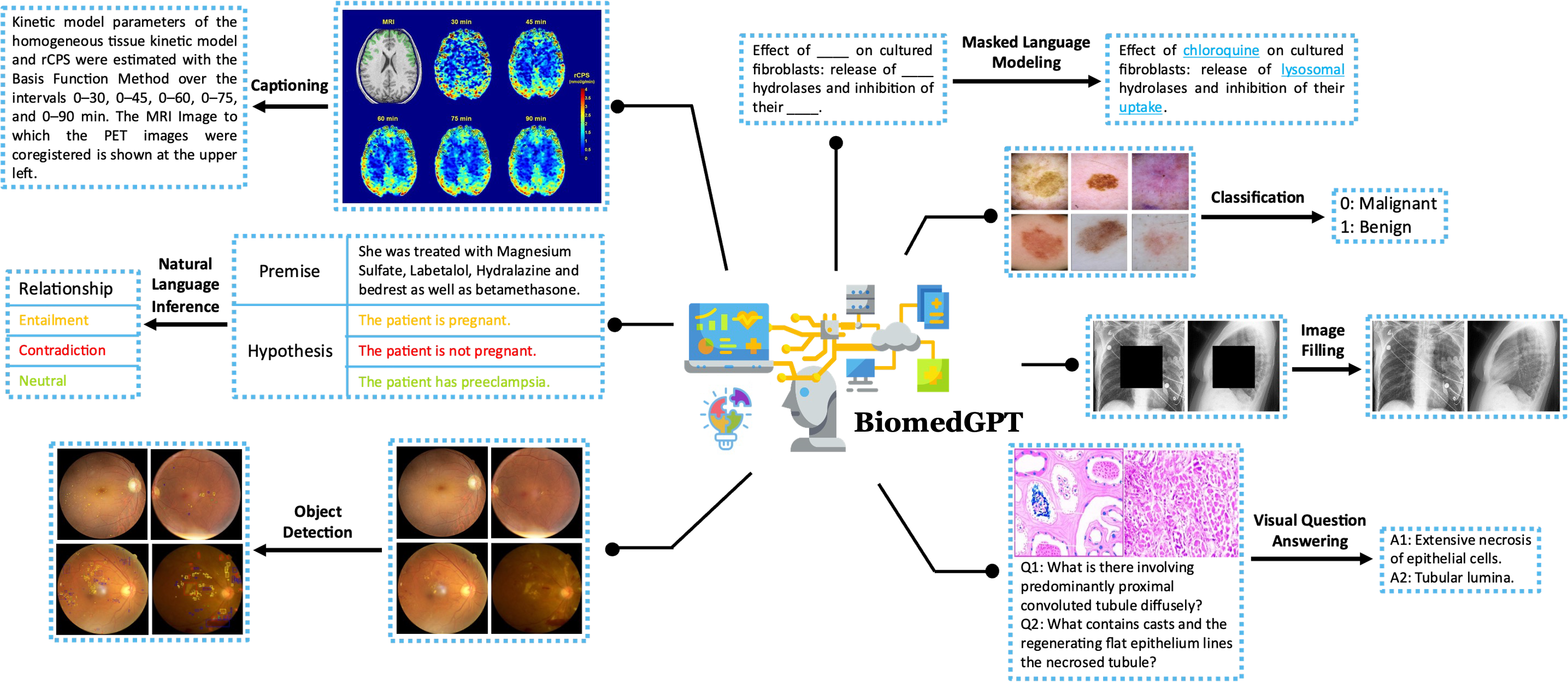

- 2024.07: 🔥🔥BiomedGPT: A generalist vision–language foundation model for diverse biomedical tasks is accepted by Nature Medicine!

- 2024.05: 🔥🔥FedMLSecurity: A Benchmark for Attacks and Defenses in Federated Learning and LLMs is accepted by KDD!

- 2024.01: 🎉🎉 I will join

in Princeton, NJ as a Research Intern during the summer, working on Multimodal Large Language Models for Healthcare!

<!–

<!– - 2023.07-10: We conduct several evaluations of large foundation models for healthcare, including Holistic evaluation of GPT-4V for biomedical imaging, primary outcomes of GPT-4V for radiology and pathology VQA, and LLM for radiology NLP.

- 2023.06: We release FedMLSecurity: A Benchmark for Attacks and Defenses in Federated Learning and LLMs!

- 2023.05: The first preprint of BiomedGPT: A Unified and Generalist Biomedical Generative Pre-trained Transformer for Vision, Language, and Multimodal Tasks is released. We are still working on it!

- 2023.04: 🎉🎉 I will join

in San Diego as an Applied Scientist Intern during the summer. See you in California!

- 2023.03: The first preprint of FeDepth is released.

- 2023.02: The first preprint of A Comprehensive Survey on Pretrained Foundation Models: A History from BERT to ChatGPT is released. We are still working on it!

- 2023.01: 🎉🎉 I join Mayo ADVANCE Lab as a Bioinformatics Intern.

- 2022.10: FedR is accepted by EMNLP 22.

- 2022.09: 🎉🎉 My internship at Samsung Research America is extended to the fall semester!

- 2022.08: 🔥🔥Adversarial Attack and Defense on Graph Data: A Survey is accepted by TKDE.

- 2022.06: I’m honored to be a PC Member of FedGraph2022 @ CIKM22. We need your contributions!

- 2022.05: 🎉🎉 I work as a Summer Research Intern for Knox - AI/Mobile/Privacy @

.

- 2022.02: I’m honored to be invited to present at the WSDM’22 FL4P-WSDM Workshop.

–>

📝 Publications

BiomedGPT: A Generalist Vision-Language Foundation Model for Diverse Biomedical Tasks

Kai Zhang, Rong Zhou, Eashan Adhikarla, Zhiling Yan, Yixin Liu, Jun Yu, Zhengliang Liu, Xun Chen, Brian D. Davison, Hui Ren, Jing Huang, Chen Chen, Yuyin Zhou, Sunyang Fu, Wei Liu, Tianming Liu, Xiang Li, Yong Chen, Lifang He, James Zou, Quanzheng Li, Hongfang Liu, Lichao Sun

- TL;DR: We introduce a unified and generalist Biomedical Generative Pre-trained Transformer (BiomedGPT) model, which leverages self-supervision on large and diverse datasets to accept multi-modal inputs and perform a range of downstream tasks.

- Source Code: BiomedGPT

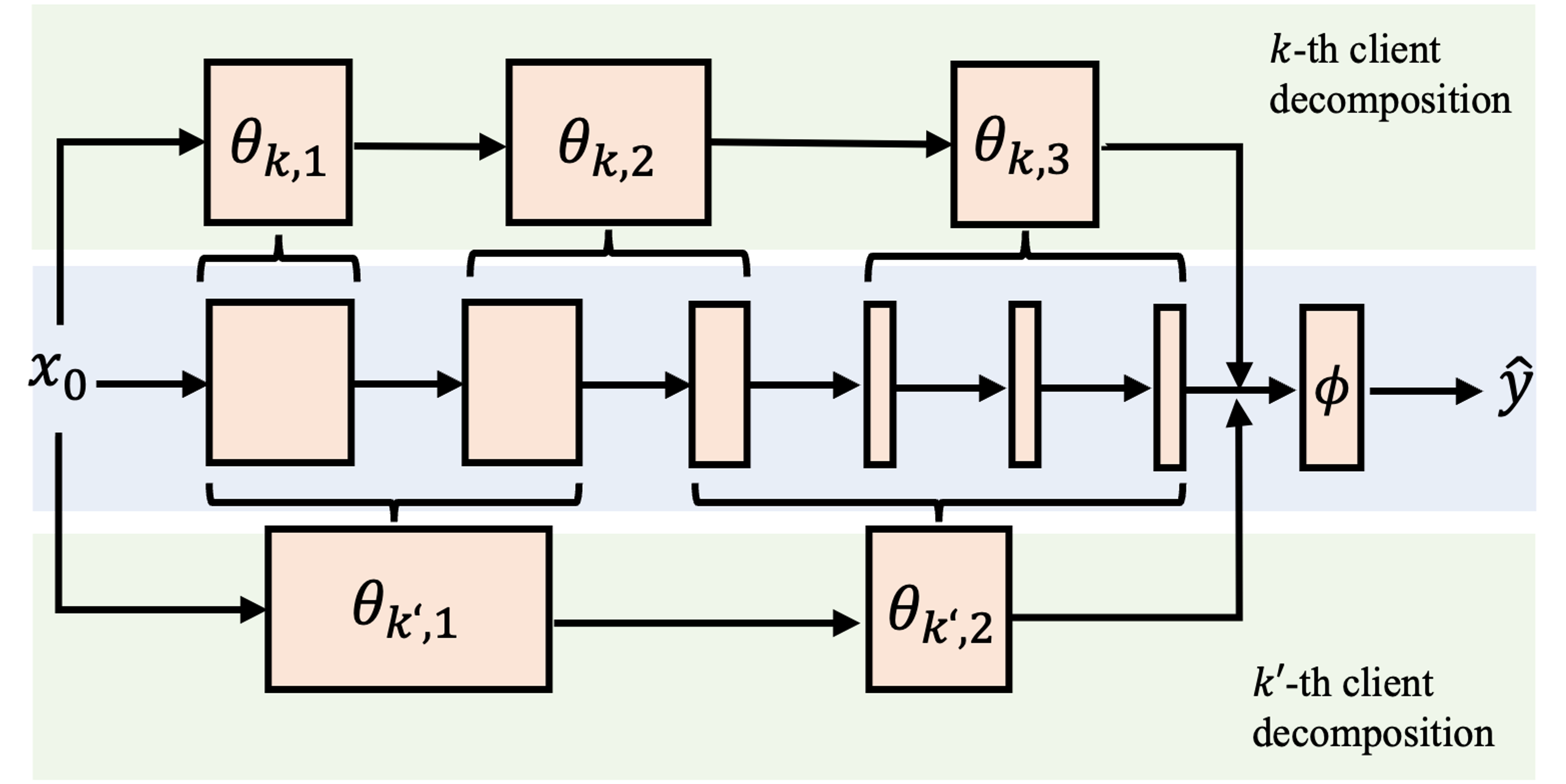

Memory-adaptive Depth-wise Heterogenous Federated Learning

Kai Zhang, Yutong Dai, Hongyi Wang, Eric Xing, Xun Chen, Lichao Sun

- TL;DR: We introduce a memory-adaptive depth-wise learning solution in FL, which adaptively decomposes the full model into blocks according to each client's memory budget and trains the blocks sequentially to obtain a full inference model.

- Source Code: FeDepth

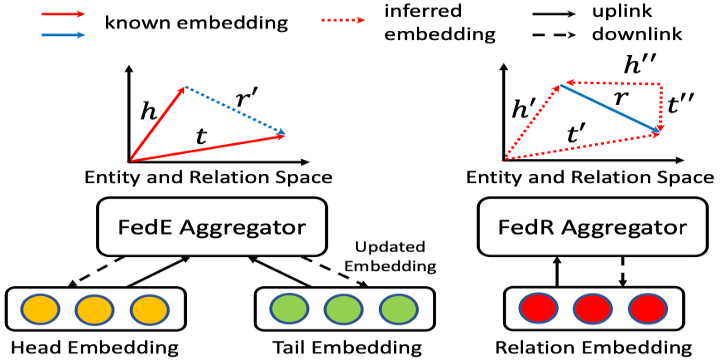

Kai Zhang, Yu Wang, Hongyi Wang, Lifu Huang, Carl Yang, Xun Chen, Lichao Sun

- TL;DR: Our framework allows for sharing entity embeddings of knowledge graphs across multiple clients while protecting privacy to prevent any potential leakage.

- Source Code: FedR

Adversarial Attack and Defense on Graph Data: A Survey

Lichao Sun, Yingtong Dou, Carl Yang, Kai Zhang, Ji Wang, Philip S. Yu, Lifang He, Bo Li